
Financial Data Weighting

- Author: Tails Azimuth

Table of Contents

- The Challenge of Non-IID Data in Finance

- When and Why Does the IID Assumption Fail?

- Defining Concurrency in Financial Labels

- Measuring Label Uniqueness

- Overlapping Outcomes Problem in Bootstrapping

- Solving with Sequential Bootstrapping

- Index Matrix Calculation

- Calculating Average Uniqueness

- Sequential Bootstrap Sampling

- Monte Carlo Verification of Method Effectiveness

- Generating Random Timestamps

- Monte Carlo Simulation for Sequential Bootstraps

- Multiple Iterations

- Results and Figures

- Weighting Returns in Machine Learning Models

- Calculating Sample Weight with Return Attribution

- Time-Decay of Sample Weights

- Cases to Consider for Time-Decay

- References


The Challenge of Non-IID Data in Finance

Many financial models assume that data points are independent and identically distributed (IID). In real-world financial applications, however, this assumption often fails. This post shows how to use sample weights and sequential bootstrapping to address the problem.

When and Why Does the IID Assumption Fail?

Financial labels, such as returns, are often based on overlapping time intervals. This overlapping nature makes these labels non-IID. While some machine learning applications can manage without the IID assumption, most financial models struggle without it. Let's explore some techniques to mitigate this issue.

Defining Concurrency in Financial Labels

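Following López de Prado (2018), we say that two labels, $y_i$ and $y_j$, are concurrent at time $t$ if they both depend on at least one common return $r_{t-1,t} = p_t / p_{t-1} - 1$. To quantify this, we use an indicator function, $1_{t,i}$, defined as:

$$1_{t,i} = \begin{cases} 1 & \text{if } [t_{i,0}, t_{i,1}] \text{ overlaps with } [t-1, t] \\ 0 & \text{otherwise} \end{cases}$$

Here $[t_{i,0}, t_{i,1}]$ is the interval over which label $y_i$ is determined. The number of labels that are concurrent at time $t$ is represented by $c_t = \sum_{i=1}^{I} 1_{t,i}$.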
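As a minimal sketch of the concurrency count (not the RiskLabAI implementation), assume each label is described by the integer indices of the first and last bar it spans, both inclusive. The function name `count_concurrent_labels` and the three example labels are hypothetical and chosen only for illustration.

```python
import numpy as np

def count_concurrent_labels(starts, ends, n_steps):
    """Count how many labels are active at each time step.

    Label i is assumed to span bars starts[i]..ends[i], inclusive.
    """
    counts = np.zeros(n_steps, dtype=int)
    for start, end in zip(starts, ends):
        counts[start:end + 1] += 1  # label is active on every bar it spans
    return counts

# Three illustrative labels over six bars; the first two overlap on bar 2.
starts = [0, 2, 4]
ends = [2, 3, 5]
concurrency = count_concurrent_labels(starts, ends, n_steps=6)
print(concurrency)
```

Bars where the count exceeds one are the bars on which labels share a return and are therefore not independent.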


Measuring Label Uniqueness

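Next, we introduce a function to measure the uniqueness of label $i$ at a given time $t$. This function, denoted as $u_{t,i}$, is defined as:

$$u_{t,i} = \frac{1_{t,i}}{c_t}$$

where $1_{t,i}$ indicates whether label $i$ is active at time $t$ and $c_t$ is the number of labels active at that time. The average uniqueness of a label over its lifespan is given by:

$$\bar{u}_i = \frac{\sum_{t=1}^{T} u_{t,i}}{\sum_{t=1}^{T} 1_{t,i}}$$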

In both RiskLabAI's Python and Julia libraries, you can estimate label uniqueness using specific functions.
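The calculation can be sketched in a few lines, independently of the library functions. This is an illustration under the assumption that labels are given as inclusive integer bar spans; `average_uniqueness_from_spans` is a hypothetical name, not a RiskLabAI function.

```python
import numpy as np

def average_uniqueness_from_spans(starts, ends, n_steps):
    """Average of 1 / (number of concurrent labels) over each label's lifespan."""
    counts = np.zeros(n_steps)
    for start, end in zip(starts, ends):
        counts[start:end + 1] += 1
    # On the bars a label spans, its uniqueness is the reciprocal of the count.
    return np.array([
        np.mean(1.0 / counts[start:end + 1])
        for start, end in zip(starts, ends)
    ])

# Same three illustrative labels: only bar 2 is shared (by labels 0 and 1).
avg_uniqueness = average_uniqueness_from_spans([0, 2, 4], [2, 3, 5], n_steps=6)
print(avg_uniqueness)
```

A label that never overlaps with another has average uniqueness 1, and the value falls toward 0 as more labels share its bars.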


Overlapping Outcomes Problem in Bootstrapping

When bootstrapping draws $I$ items with replacement from a set of $I$ items, some items are selected more than once and others are not selected at all. For larger sets, the probability of not selecting a particular element, $(1 - I^{-1})^{I}$, converges to $e^{-1}$. As a result, only about $1 - e^{-1} \approx 63\%$ of the observations in a bootstrap sample are unique. When the labels also overlap in time, the drawn observations are even more redundant, which makes standard bootstrapping inefficient.
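The limit can be checked numerically. The sketch below compares the closed-form expected share of unique items with a small simulation; the function names, set size, and number of trials are arbitrary choices made for this illustration.

```python
import math
import numpy as np

def expected_unique_fraction(n_items):
    """Expected share of distinct items when drawing n_items with replacement."""
    return 1.0 - (1.0 - 1.0 / n_items) ** n_items

def simulated_unique_fraction(n_items, n_trials, seed=0):
    """Average share of distinct items over repeated standard bootstraps."""
    rng = np.random.default_rng(seed)
    fractions = [
        np.unique(rng.integers(0, n_items, size=n_items)).size / n_items
        for _ in range(n_trials)
    ]
    return float(np.mean(fractions))

theoretical = expected_unique_fraction(1000)
simulated = simulated_unique_fraction(n_items=200, n_trials=200)
print(theoretical, simulated, 1.0 - math.exp(-1.0))
```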

Solving with Sequential Bootstrapping

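Sequential bootstrapping draws observations one at a time and updates the selection probabilities after each draw, so that observations overlapping with those already drawn become less likely to be selected. The first draw is uniform. Let $\varphi^{(i-1)}$ denote the sequence of observations drawn before step $i$. The probability of selecting observation $j$ at step $i$ is calculated using:

$$\delta_j^{(i)} = \frac{\bar{u}_j^{(i)}}{\sum_{k=1}^{I} \bar{u}_k^{(i)}}$$

where $u_{t,j}^{(i)} = 1_{t,j} \left(1 + \sum_{k \in \varphi^{(i-1)}} 1_{t,k}\right)^{-1}$ and $\bar{u}_j^{(i)} = \left(\sum_{t=1}^{T} u_{t,j}^{(i)}\right) \left(\sum_{t=1}^{T} 1_{t,j}\right)^{-1}$, with $1_{t,j}$ indicating whether label $j$ is active at time $t$. This approach reduces the chance of selecting overlapping outcomes.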
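To make the update concrete, the sketch below computes the selection probabilities for the next draw given the labels already drawn. It assumes an indicator matrix with one row per time step and one column per label; `next_draw_probabilities` is a hypothetical helper, not part of RiskLabAI.

```python
import numpy as np

def next_draw_probabilities(indicator, drawn):
    """Selection probability of each label, given the labels drawn so far.

    indicator: (time steps x labels) 0/1 matrix.
    drawn: indices of labels already in the sample (repeats allowed).
    """
    drawn = np.asarray(drawn, dtype=int)
    # How often each time step is already covered by the drawn labels.
    drawn_counts = indicator[:, drawn].sum(axis=1)
    # Uniqueness each candidate would have if it were added to the sample.
    uniqueness = indicator / (1.0 + drawn_counts)[:, None]
    avg_uniqueness = uniqueness.sum(axis=0) / indicator.sum(axis=0)
    return avg_uniqueness / avg_uniqueness.sum()

# Three illustrative labels over six time steps; labels 0 and 1 share step 2.
indicator = np.array([
    [1, 0, 0],
    [1, 0, 0],
    [1, 1, 0],
    [0, 1, 0],
    [0, 0, 1],
    [0, 0, 1],
])
first = next_draw_probabilities(indicator, drawn=[])
second = next_draw_probabilities(indicator, drawn=[1])
print(first, second)
```

With nothing drawn yet, every label is equally likely. After label 1 is drawn, label 1 itself becomes the least likely pick, the partly overlapping label 0 is penalized less, and the non-overlapping label 2 is favored.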

Index Matrix Calculation

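The sequential bootstrap works on an index (indicator) matrix, with one row per time step and one column per label, whose entries mark the time steps each label spans. Both the Python and Julia libraries in RiskLabAI offer functions to calculate the index matrix. In Python, it's index_matrix and in Julia, it's indexMatrix.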
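For orientation, here is a minimal sketch of how such a matrix could be built. It is not the code behind index_matrix or indexMatrix: the function name `build_indicator_matrix` and the convention of inclusive integer bar spans are assumptions made for this example.

```python
import numpy as np

def build_indicator_matrix(starts, ends, n_steps):
    """Return a (time steps x labels) matrix with 1 where a label is active."""
    indicator = np.zeros((n_steps, len(starts)), dtype=int)
    for column, (start, end) in enumerate(zip(starts, ends)):
        indicator[start:end + 1, column] = 1
    return indicator

# Three illustrative labels spanning bars 0-2, 2-3, and 4-5.
indicator = build_indicator_matrix([0, 2, 4], [2, 3, 5], n_steps=6)
print(indicator)
```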


Calculating Average Uniqueness

Both libraries also offer functions to calculate the average uniqueness of the samples. In both Python and Julia, the function is averageUniqueness.
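A sketch of the same calculation on an indicator matrix is shown below. It is an illustration only: `average_uniqueness` here is a stand-alone hypothetical helper whose signature is not taken from RiskLabAI, and the matrix layout (rows are time steps, columns are labels) is an assumption.

```python
import numpy as np

def average_uniqueness(indicator):
    """Average uniqueness of each column (label) of a 0/1 indicator matrix."""
    concurrency = indicator.sum(axis=1)  # labels active at each time step
    active = concurrency > 0             # skip steps that no label covers
    uniqueness = indicator[active] / concurrency[active][:, None]
    return uniqueness.sum(axis=0) / indicator.sum(axis=0)

indicator = np.array([
    [1, 0, 0],
    [1, 0, 0],
    [1, 1, 0],
    [0, 1, 0],
    [0, 0, 1],
    [0, 0, 1],
])
all_labels = average_uniqueness(indicator)
# A bootstrap sample is evaluated by selecting columns, repeats included.
resampled = average_uniqueness(indicator[:, [0, 1, 1]])
print(all_labels, resampled)
```

Drawing label 1 twice lowers the uniqueness of both copies and of label 0, which shares a bar with them. This is the redundancy the sequential bootstrap is designed to reduce.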


Sequential Bootstrap Sampling

Finally, for sequential bootstrap sampling, the Python function is SequentialBootstrap and the Julia function is sequentialBootstrap.
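The full sampling loop can be sketched as follows. This is a simplified illustration rather than the RiskLabAI implementation; the function name `sequential_bootstrap`, its parameters, and the use of NumPy's random generator are assumptions.

```python
import numpy as np

def sequential_bootstrap(indicator, sample_size=None, seed=None):
    """Draw label indices one at a time, favoring labels that overlap least
    with those already drawn.

    indicator: (time steps x labels) 0/1 matrix; every label must be active
    on at least one time step.
    """
    rng = np.random.default_rng(seed)
    n_labels = indicator.shape[1]
    sample_size = n_labels if sample_size is None else sample_size
    lifespan = indicator.sum(axis=0)
    drawn_counts = np.zeros(indicator.shape[0])  # coverage by drawn labels
    sample = []
    for _ in range(sample_size):
        # Average uniqueness each label would have if added to the sample.
        uniqueness = indicator / (1.0 + drawn_counts)[:, None]
        avg_uniqueness = uniqueness.sum(axis=0) / lifespan
        probabilities = avg_uniqueness / avg_uniqueness.sum()
        choice = int(rng.choice(n_labels, p=probabilities))
        sample.append(choice)
        drawn_counts += indicator[:, choice]
    return sample

indicator = np.array([
    [1, 0, 0],
    [1, 0, 0],
    [1, 1, 0],
    [0, 1, 0],
    [0, 0, 1],
    [0, 0, 1],
])
sample = sequential_bootstrap(indicator, seed=42)
print(sample)
```

Repeated draws remain possible, since the method only lowers the probability of redundant picks and does not forbid them.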


These functionalities are available in both Python and Julia in the RiskLabAI library. For more details, you can visit the GitHub repositories for each language.

Monte Carlo Verification of Method Effectiveness

Our goal is to assess the performance of different bootstrapping techniques. We focus on comparing the Sequential Bootstrap method with the Standard Bootstrap. We accomplish this through Monte Carlo experiments that utilize random timestamps and various other parameters.

Generating Random Timestamps

We generate random timestamps for each observation within the given parameters. The function randomTimestamp does this job in both the Python and Julia libraries of RiskLabAI.
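One way to generate such inputs is sketched below. The parameters (number of labels, number of bars, and maximum holding period) and the name `random_label_spans` are assumptions made for this example and may differ from the arguments randomTimestamp accepts.

```python
import numpy as np

def random_label_spans(n_labels, n_bars, max_holding, seed=None):
    """Draw random start bars and holding periods for n_labels labels.

    Each label starts on a uniformly chosen bar and lasts between 1 and
    max_holding bars beyond its start. Spans are sorted by start bar.
    """
    rng = np.random.default_rng(seed)
    starts = rng.integers(0, n_bars, size=n_labels)
    durations = rng.integers(1, max_holding + 1, size=n_labels)
    ends = starts + durations
    order = np.argsort(starts, kind="stable")
    return starts[order], ends[order]

starts, ends = random_label_spans(n_labels=10, n_bars=100, max_holding=5, seed=1)
print(list(zip(starts.tolist(), ends.tolist())))
```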


Monte Carlo Simulation for Sequential Bootstraps

We run Monte Carlo simulations to compare the Sequential Bootstrap with the Standard Bootstrap using the monteCarloSimulationforSequentionalBootstraps function.


Multiple Iterations

For a more robust assessment, we run the Monte Carlo simulation in multiple iterations for both Sequential and Standard Bootstraps using SimulateSequentionalVsStandardBootstrap.
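The experiment can be sketched end to end as below. It is a compact stand-in for the library routines, not a reproduction of them: every function name, the parameter defaults, and the choice of 200 trials are assumptions made for illustration.

```python
import numpy as np

def indicator_from_spans(starts, ends):
    """Build a (time steps x labels) 0/1 matrix from inclusive bar spans."""
    indicator = np.zeros((int(ends.max()) + 1, len(starts)))
    for column, (start, end) in enumerate(zip(starts, ends)):
        indicator[start:end + 1, column] = 1.0
    return indicator

def mean_average_uniqueness(indicator):
    """Mean over labels of each label's average uniqueness."""
    concurrency = indicator.sum(axis=1)
    active = concurrency > 0
    uniqueness = indicator[active] / concurrency[active][:, None]
    return float((uniqueness.sum(axis=0) / indicator.sum(axis=0)).mean())

def sequential_sample(indicator, rng):
    """Sequential bootstrap: draw as many labels as there are columns."""
    n_labels = indicator.shape[1]
    lifespan = indicator.sum(axis=0)
    drawn_counts = np.zeros(indicator.shape[0])
    sample = []
    for _ in range(n_labels):
        avg_u = (indicator / (1.0 + drawn_counts)[:, None]).sum(axis=0) / lifespan
        choice = int(rng.choice(n_labels, p=avg_u / avg_u.sum()))
        sample.append(choice)
        drawn_counts += indicator[:, choice]
    return sample

def one_trial(n_labels, n_bars, max_holding, rng):
    """Return (standard, sequential) mean average uniqueness for one dataset."""
    starts = rng.integers(0, n_bars, size=n_labels)
    ends = starts + rng.integers(1, max_holding + 1, size=n_labels)
    indicator = indicator_from_spans(starts, ends)
    standard = rng.integers(0, n_labels, size=n_labels)  # uniform, with replacement
    sequential = sequential_sample(indicator, rng)
    return (mean_average_uniqueness(indicator[:, standard]),
            mean_average_uniqueness(indicator[:, sequential]))

def run_trials(n_trials, n_labels=10, n_bars=100, max_holding=5, seed=0):
    rng = np.random.default_rng(seed)
    return np.array([one_trial(n_labels, n_bars, max_holding, rng)
                     for _ in range(n_trials)])

results = run_trials(200)
print("standard:", results[:, 0].mean(), "sequential:", results[:, 1].mean())
```

Each row of `results` holds one trial, so the two columns can be plotted as histograms to compare the distributions of average uniqueness.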


Results and Figures

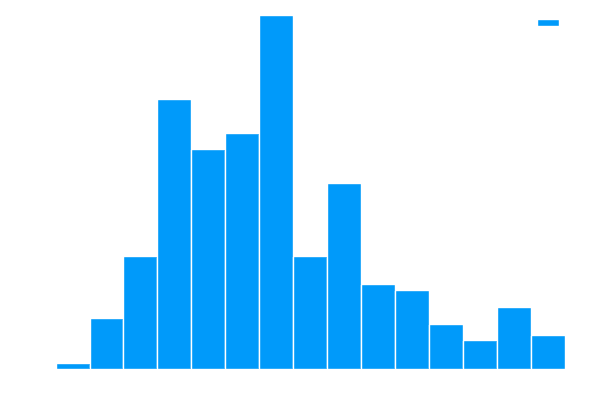

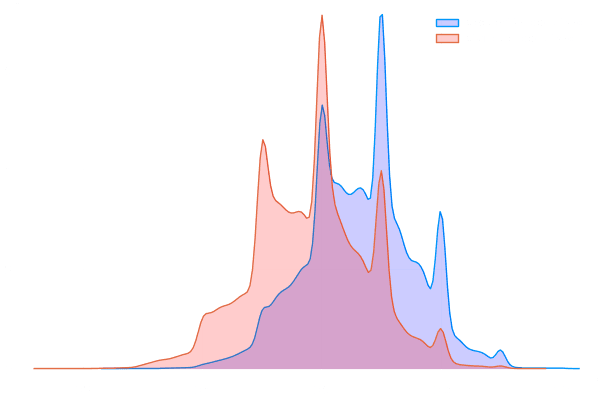

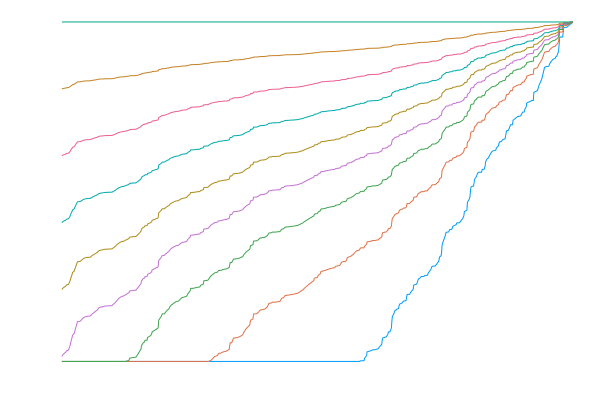

The Monte Carlo tests reveal differences between Standard and Sequential Bootstraps.

We also examine the histogram of the average uniqueness for both bootstrapping techniques. This gives us insights into how unique each sample is, allowing for better analysis.

Weighting Returns in Machine Learning Models

In machine learning for finance, it's critical to weight observations properly. Labels associated with large absolute returns should receive more weight than labels associated with negligible returns. The uniqueness of an observation also plays a role in determining its weight.

Calculating Sample Weight with Return Attribution

In RiskLabAI, we offer functions to handle this weight assignment. The Julia function sampleWeight and the Python function mpSampleWeightAbsoluteReturn both serve this purpose.
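As an illustration of the idea, the sketch below follows the absolute return attribution described by López de Prado (2018): each bar's log-return is divided by the number of labels sharing that bar, the attributed returns are summed over the label's lifespan, and the absolute values are scaled to sum to the number of labels. It is not the code behind sampleWeight or mpSampleWeightAbsoluteReturn; the function name, the inclusive bar spans, and the example returns are assumptions.

```python
import numpy as np

def return_attribution_weights(log_returns, starts, ends):
    """Sample weights from absolute, concurrency-adjusted log-returns."""
    log_returns = np.asarray(log_returns, dtype=float)
    concurrency = np.zeros(len(log_returns))
    for start, end in zip(starts, ends):
        concurrency[start:end + 1] += 1
    raw = np.array([
        abs(np.sum(log_returns[start:end + 1] / concurrency[start:end + 1]))
        for start, end in zip(starts, ends)
    ])
    return raw * len(raw) / raw.sum()  # scale so the weights sum to I

# Made-up log-returns for six bars and three labels spanning bars 0-2, 2-3, 4-5.
log_returns = [0.01, -0.02, 0.03, 0.01, -0.01, 0.02]
weights = return_attribution_weights(log_returns, [0, 2, 4], [2, 3, 5])
print(weights)
```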



Time-Decay of Sample Weights

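Over time, older market data becomes less relevant. Thus, a time-decay factor is applied to the sample weights. The decay factor is defined by a user-specified parameter $c \in (-1, 1]$. The weight decay is piecewise linear in the cumulative uniqueness $x \in \left[0, \sum_{i=1}^{I} \bar{u}_i\right]$ and follows the formula:

$$d[x] = \max\{0, a + bx\}$$

where $a$ and $b$ are calculated based on the boundary conditions $d\left[\sum_{i=1}^{I} \bar{u}_i\right] = 1$, so that the newest observation keeps its full weight, and $d[0] = c$ for $c \ge 0$. For $c < 0$, the second condition becomes $d\left[-c \sum_{i=1}^{I} \bar{u}_i\right] = 0$.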

Again, RiskLabAI has built-in functions for this. The Julia function TimeDecay and its Python equivalent handle weight adjustments based on time.



Cases to Consider for Time-Decay

- $c = 1$ implies no decay.

- $0 < c < 1$ implies linear decay, with all observations still getting some weight.

- $c = 0$ leads to weights converging linearly to zero as they age.

- $c < 0$ implies that the oldest portion of the observations gets zero weight.
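The four cases can be reproduced with a short sketch of the piecewise-linear decay from López de Prado (2018). It is not the RiskLabAI TimeDecay function: the name `time_decay_factors`, the argument layout, and the example inputs are assumptions, and the average uniqueness values are taken to be ordered from oldest to newest.

```python
import numpy as np

def time_decay_factors(avg_uniqueness, c=1.0):
    """Piecewise-linear decay factors d[x] = max(0, a + b * x).

    avg_uniqueness: average uniqueness per observation, oldest first.
    c: decay parameter in (-1, 1]; the newest observation always gets 1.
    """
    if not -1.0 < c <= 1.0:
        raise ValueError("c must lie in (-1, 1]")
    x = np.cumsum(np.asarray(avg_uniqueness, dtype=float))
    total = x[-1]
    if c >= 0:
        slope = (1.0 - c) / total          # from d[0] = c
    else:
        slope = 1.0 / ((c + 1.0) * total)  # from d[-c * total] = 0
    intercept = 1.0 - slope * total        # from d[total] = 1
    return np.maximum(0.0, intercept + slope * x)

uniqueness = np.ones(4)  # four fully unique observations, for illustration
no_decay = time_decay_factors(uniqueness, c=1.0)
partial = time_decay_factors(uniqueness, c=0.5)
to_zero = time_decay_factors(uniqueness, c=0.0)
cutoff = time_decay_factors(uniqueness, c=-0.5)
print(no_decay, partial, to_zero, cutoff)
```

The resulting factors are meant to multiply the sample weights, so that older observations contribute less to the fit.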

References

- López de Prado, M. (2018). Advances in Financial Machine Learning. John Wiley & Sons.

- López de Prado, M. (2020). Machine Learning for Asset Managers. Cambridge University Press.